PowerPoints

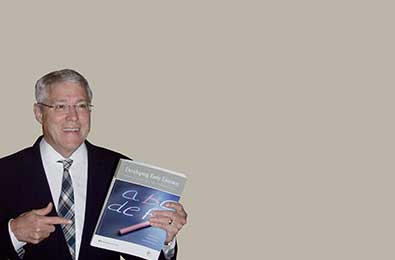

You can download slides from Tim’s presentations.

Blast from the Past: This blog entry first posted on May 5, 2018, and re-posted on April 20, 2024. The reason for this re-post is twofold: I received the letter below from an educational consultant who was troubled about how some school dis...

Teacher question: One of the most important activities in my class is guided reading. I don’t mean the “Guided Reading” program (we use a textbook), but group work with the children reading under my guidance. Some of our teachers do this with the whole class. I think it works better the way I do it, with small reading groups. The students read the text, I ask questions, and we talk about it. In my experience that is helpful. Is there any research supporting that?

Shanahan response: First, let’s set aside the term “guided reading.” It now appears to be a wholly owned...

Blast from the Past: This piece first posted on February 7, 2017, and was reposted on March 23, 2024. Nothing to change or update here, but given recent questions and discussions on social media, I think it would be worthwhile to revisit th...

Here are links to some of Tim’s favorite literacy resources.

What books about literacy does Tim recommend?

National and international literacy charities that receive high Charity Navigator ratings. Put your money where your love is.

Your chance to see Tim talk about literacy—right on your own device.

Copyright © 2024 Shanahan on Literacy. All rights reserved. Web Development by Dog and Rooster, Inc.